The early years of the pharmaceutical industry were uneasy, and the industry as we know it today did not formally exist. For thousands of years the art of medicine focused on botanicals and herbs, which were distributed by local apothecaries. Some known plants were mass-produced, but the true beginning of the age of modern drug discovery can be said to be the isolation of morphine from opium by a German apothecary's assistant. By the end of the 1800s, rudimentary organic synthesis was being performed, allowing elementary changes to drug molecular structure; the forerunner of the National Institutes of Health (NIH) had been established; and modern pharmacology was being born. Louis Pasteur developed the first laboratory-made vaccines, and drugs such as paracetamol, aspirin, benzocaine and quinine (used to treat malaria) were discovered, some even being formulated for public sale and use. By the early 1920s, compounds such as barbiturates and adrenaline were being understood, extracted or synthesized, and even used in surgery. By the 1930s, following World War I, antiseptics and antibiotics were starting to enter the world stage after the discovery of penicillin in 1928, and the worldwide demand for drug treatment fuelled a new era of drug research and discovery in what can now be described as the golden age of drug discovery.

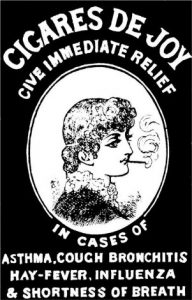

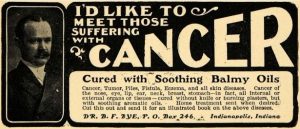

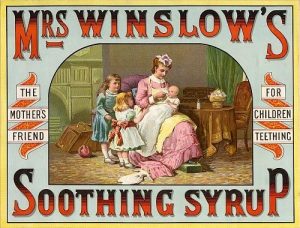

At this point in history, drugs were not effectively tested for safety, and medicines were generally produced by small-scale manufacturers. There was little regulatory control over the manufacturing processes, over the advertising of these products, or over claims of their efficacy. Before 1900, there were no laws protecting the public from dangerous drugs. Many medicines sold to treat particular diseases were useless, and some also posed a risk to health because they could be harmful. So-called 'medicines' such as Benjamin Bye's soothing oils, sold as a cure for cancer, or William Radam's Microbe Killer had no benefit whatsoever and were, by any measure, a scam. Drugs such as cannabis were common ingredients in many formulations, and morphine, heroin, cocaine and opium were freely sold to the public with no regulation of what could be written on the container or of warnings about the dangers of their use.

One of the first laws in history pertaining to therapeutic drugs was the original 'Pure Food and Drug Act', passed in the United States in 1906. It prohibited misbranded foods, drinks and drugs, but it was limited in that it only required drugs to meet standards of strength and purity. Because this was still lacking, the Act was later amended to prohibit false claims of therapeutic value intended to defraud consumers. The law was enforced by the Bureau of Chemistry of the U.S. Department of Agriculture, whose regulatory work passed to the agency that in 1930 was renamed the Food and Drug Administration, known today as the FDA.

In 1937, an ingredient in a medicine caused a tragedy. A solvent, diethylene glycol, was used to make a syrup of sulphanilamide, a new antibacterial drug. The manufacturer did no research into the solvent's safety, conducted no tests on animals, and appraised the product solely on its sweet taste, appearance and fragrance. Its use led to the deaths of more than 100 people, many of them children. In 1938 a new law, the 'Food, Drug, and Cosmetic Act', was passed. It introduced the first regulations of their kind on medicines, including safe labelling, tolerances for unavoidable poisonous substances, factory inspections and, for the first time in history, a requirement that medicines be proven safe for their intended use. The Act was later amended to introduce labelling that required certain drugs to be sold by prescription only. This was the dawn of the age of prescription medication.

Before this, clinical research was practically non-existent. The first trial of any sort relating to health is believed to have taken place under King Nebuchadnezzar of Babylon around 500 B.C.; it followed no particular method and simply compared the effects of a vegetable diet with those of a diet of meat and wine. The Persian Ibn Sina, known in the West as Avicenna, was a scientist of the Islamic Golden Age who set out what may be the first systematic approach to clinical trials. In his famous book, the Canon of Medicine, he lays down several conditions for the testing of drugs and states: “Experimentation will bring us a complete understanding of the strength of drugs; however, only if the conditions below are followed”. It was some 700 years later that the first recognized clinical trial took place, when James Lind compared treatments for scurvy and noted their effects in 1747.

The ethical standards that govern medical studies today were absent, and there were no formal laws against immoral experimentation. A prime example was 'The Tuskegee Syphilis Study' (1932 to 1972), in which 399 African-American men with syphilis were observed to understand the progression of the disease and were denied penicillin even after it had become an established cure. This led to the spread of the disease to others, and many children were born with congenital syphilis. During World War II, the Nazi regime conducted abhorrently cruel human experiments and ran an active eugenics programme, inspired by an American movement that promoted the sterilization of individuals deemed to have undesirable characteristics. After the war, the 1947 Nuremberg Doctors' Trial produced a new document, the Nuremberg Code, which set the precedent for the ethics of modern clinical trials. Astonishingly, the Code was initially rejected by Western medical practitioners, and informed consent seemed to be the most troublesome issue for physicians.

In 1958, at a time when up to 1 in 7 people in the U.S. regularly used sleeping pills, a new drug called thalidomide, created by the German company Chemie Grünenthal and sold as “Contergan”, was being widely advertised and prescribed. Initial studies were positive: they showed an apparent lack of toxicity, and the drug did not cause dependence, unlike the barbiturates, the main sleeping medications of the time. Furthermore, it was believed to be extremely safe because overdoses in suicide attempts did not cause death. During this period, the effects of drugs on pregnancy were already quite well documented, and a growing body of published work showed that fetuses could be deformed by external influences, including poisons and therapeutic drugs. The effects of diseases such as syphilis and rubella on the fetus were known and acted upon, and many published studies demonstrated the teratogenic effects of drugs; yet, for reasons that remain unclear, this evidence was ignored, and drugs that are now prohibited in pregnancy were still freely prescribed to pregnant women. The effect of alcohol on pregnancy had likewise long been known and documented but was not acted upon, further illustrating the medical community's denial of the effects of drugs in pregnancy.

Shortly after its release, thalidomide was found to relieve morning sickness in pregnancy and was frequently prescribed to pregnant mothers. Little did the prescribing doctors know that they were fuelling what is now known as the worst medical disaster in history. There was concern in some quarters that the drug might be associated with birth defects; however, the first report of an association with phocomelia did not appear until November 1961. Phocomelia is a birth defect in which the arms and legs are severely underdeveloped, with the hands and/or feet very close to the trunk of the body. In all, an estimated 10,000 to 12,000 children across the world were born with this devastating lifelong condition. In the wake of this tremendous tragedy, the U.S. government recognized the need for more stringent control of medicines entering the market, and the Kefauver-Harris Drug Amendments of 1962 were enacted. From then on, among other ground-breaking protective rules, manufacturers had to prove that their medicines were not only safe but also effective, and had to publish a list of possible side effects.

A medical catastrophe arose in an age of emerging scientific understanding, during the transition from an era of medical misinformation to the crude beginnings of the laws, regulations and scientific knowledge that protect citizens of the world from harm today. The thalidomide tragedy will never be forgotten, nor will its contribution to revolutionizing the pre-modern drug discovery and development industry. It was the worst medical disaster in history and the culmination of many preventable factors; one might even consider it an event waiting to happen. It highlighted the need for stringent testing and control of the sale of medication and, though a calamity, forced improvements that led to the effective drug-safety network we rely on today, almost 60 years on.